Introduction

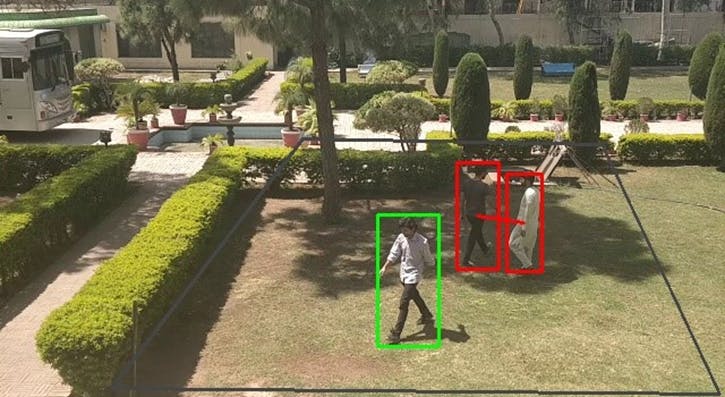

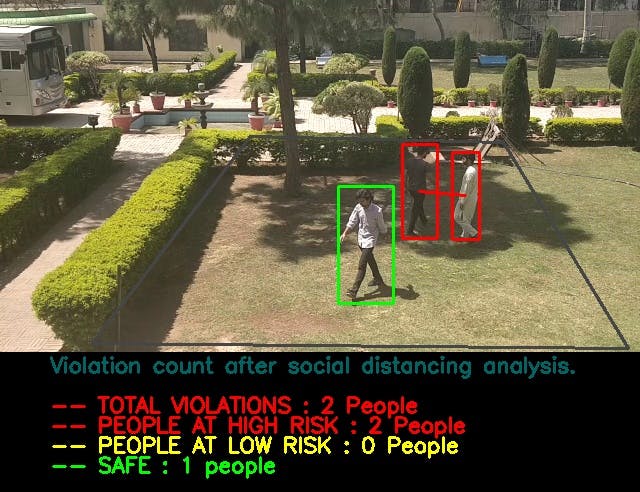

Due to COVID-19, social distancing has become mandatory to control the spread of the disease. People are advised to limit their interactions with others as much as they can, to reduce the chance of spreading the virus through physical or close contact. In this project we will see how Python, computer vision and a deep learning model can be used to monitor social distancing in public places, workplaces and educational institutes. To make sure people follow social distancing protocols in crowded places, we built real-time social distancing detection software that takes live video streams from a camera and measures the physical distance between pedestrians. Our solution detects people in the camera feed, calculates the distance between them, and points out violators in real time by drawing bounding boxes around them. The violation threshold is 180 cm: when two people come closer than this threshold, a yellow bounding box appears around them, and if they move even closer to each other the box turns red.

NOTE: I have included some functions from the code so that you can get an idea of how the system works.

Solution

Project Phases

- Detection

- Perspective Transformation

- Bird-eye Generation

- Distance Estimation

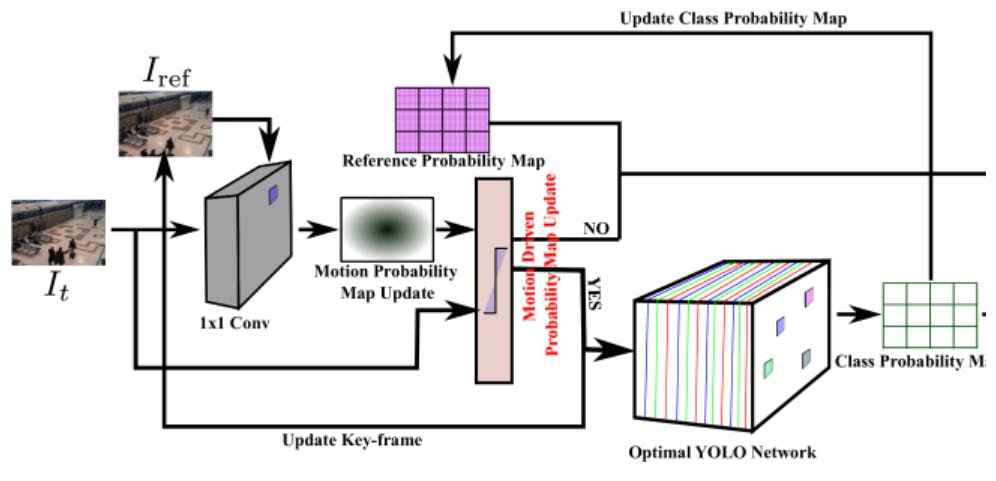

Detection:

YOLOv4 is a real-time object detection model, published in 2020, that achieved state-of-the-art performance on the COCO dataset. YOLOv4 breaks the object detection task into two pieces: regression, to locate objects through bounding boxes, and classification, to identify the class of each object. It is a deep CNN that uses several layers to detect objects, and it is one of the most optimized models for real-time detection [17]. We used YOLO to detect people in each video frame. YOLO returns a list with the position of each detected object along with the width and height of the bounding box it draws around it.

Code for detection

import cv2
import numpy as np

'''In this file we are detecting persons using yolov4 algorithm'''

confid = 0.7  # minimum confidence for a detection to be kept
thresh = 0.5  # overlap threshold used by non-maximum suppression


# Function to detect persons
def detect_pedestrians(frame, net, ln1, H, W):
    # net: loaded YOLOv4 network, ln1: names of its output layers,
    # H, W: height and width of the frame in pixels
    blob = cv2.dnn.blobFromImage(frame, 1 / 255.0, (416, 416), swapRB=True, crop=False)
    net.setInput(blob)
    layerOutputs = net.forward(ln1)

    boxes = []
    confidences = []
    for output in layerOutputs:
        for detection in output:
            scores = detection[5:]
            classID = np.argmax(scores)
            confidence = scores[classID]
            # filtering out only persons in frame (class 0)
            if classID == 0 and confidence > confid:
                # YOLO gives the box centre and size relative to the frame size
                box = detection[0:4] * np.array([W, H, W, H])
                (centerX, centerY, width, height) = box.astype("int")
                # convert the centre to the top-left corner of the box
                x = int(centerX - (width / 2))
                y = int(centerY - (height / 2))
                boxes.append([x, y, int(width), int(height)])
                confidences.append(float(confidence))

    # non-maximum suppression drops overlapping boxes for the same person
    idxs = cv2.dnn.NMSBoxes(boxes, confidences, confid, thresh)
    boxes1 = []
    for i in range(len(boxes)):
        if i in idxs:
            boxes1.append(boxes[i])
    return boxes1
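To make the last step of `detect_pedestrians` more concrete, here is a minimal pure-Python sketch of greedy non-maximum suppression over `[x, y, w, h]` boxes. It is a simplified illustration of the idea and not OpenCV's implementation of `cv2.dnn.NMSBoxes`; the helper names `iou` and `greedy_nms` and the sample boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two [x, y, w, h] boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # width and height of the overlapping rectangle (0 if there is no overlap)
    inter_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, confidences, score_threshold, nms_threshold):
    """Return the indices of the boxes that survive, highest confidence first."""
    candidates = [i for i, c in enumerate(confidences) if c > score_threshold]
    candidates.sort(key=lambda i: confidences[i], reverse=True)
    kept = []
    for i in candidates:
        # keep a box only if it does not overlap too much with a better one
        if all(iou(boxes[i], boxes[j]) <= nms_threshold for j in kept):
            kept.append(i)
    return kept


# Hypothetical detections: the first two boxes cover the same person
boxes = [[100, 100, 50, 120], [104, 98, 50, 120], [300, 110, 48, 118]]
confidences = [0.92, 0.85, 0.78]
kept = greedy_nms(boxes, confidences, score_threshold=0.7, nms_threshold=0.5)
```

With these sample values the second box is suppressed because it overlaps heavily with the first, higher-confidence one.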

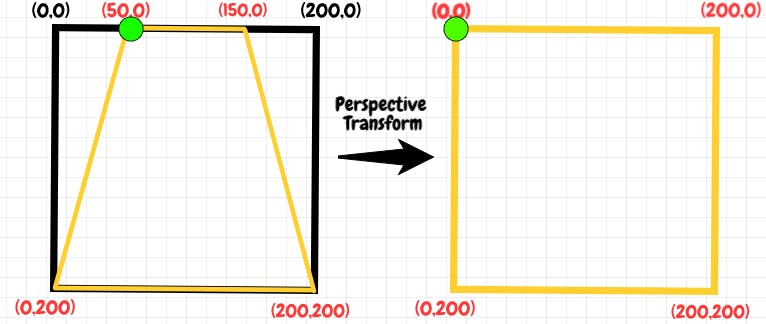

Perspective Transformation:

After detecting people using YOLOv4, the next step was to estimate the distance between the detected objects [18]. For this we used the perspective transformation provided by the OpenCV library [16], [13]. Calculating distance directly from a 2D image is not possible, because the image carries no information about how far an object is from the camera, or how far apart two objects are if they are standing along the same line. To handle this issue we used perspective transformation, which changes the view of an image or video so that the required information is easier to extract [20]. We need to give four points in the picture that mark the region we want to see from a different perspective, and the points where those corners should land in the output image. From these two sets of points we get a transformation matrix and warp the original image with it. This gives us an overhead view, like the one we would get from a drone, so that we can estimate the distance between two objects more accurately. In the end, both the ROI and the detected centroids are transformed.

Code for perspective Transformation

def Roi_transformation(points):
    # points: the four ROI corners selected in the frame;
    # H and W are the frame height and width, defined elsewhere in the script
    source = np.float32(np.array(points[:4]))
    destination = np.float32([[0, H], [W, H], [W, 0], [0, 0]])
    perspective_transform = cv2.getPerspectiveTransform(source, destination)
    return perspective_transform


transformed_roi = Roi_transformation(points)
output_list = yolo.detect_pedestrians(frame, net, ln1, H, W)


def get_transformed_points(output_list, transformed_roi):
    # centroid points list initialized here
    centroid_points = []
    for box in output_list:
        # bottom-centre of the box [x, y, w, h], i.e. where the person stands
        points = np.array([[[int(box[0] + (box[2] * 0.5)), int(box[1] + box[3])]]], dtype="float32")
        # here perspective transformation is performed on all detected persons
        bd_pnt = cv2.perspectiveTransform(points, transformed_roi)[0][0]
        pt = [int(bd_pnt[0]), int(bd_pnt[1])]
        centroid_points.append(pt)
    return centroid_points
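For each point, `cv2.perspectiveTransform` multiplies the point by the 3x3 matrix in homogeneous coordinates and then divides by the third component. The pure-Python sketch below shows that arithmetic for a single point. The helper names `apply_homography` and `bottom_centre`, the matrix and the sample box are hypothetical values chosen for illustration, not values from the project.

```python
def apply_homography(matrix, point):
    """Map an (x, y) point through a 3x3 perspective matrix."""
    x, y = point
    (a, b, c), (d, e, f), (g, h, i) = matrix
    w = g * x + h * y + i  # homogeneous scale factor
    if w == 0:
        raise ValueError("point maps to infinity under this transform")
    return ((a * x + b * y + c) / w, (d * x + e * y + f) / w)


def bottom_centre(box):
    """Middle of the bottom edge of an [x, y, w, h] box."""
    x, y, w, h = box
    return (x + w * 0.5, y + h)


# Hypothetical matrix: the last row makes points lower in the image shrink more
matrix = [[1.0, 0.0, 0.0],
          [0.0, 1.0, 0.0],
          [0.0, 0.5, 1.0]]
ground_point = bottom_centre([5, 0, 10, 2])
transformed = apply_homography(matrix, ground_point)
```

The division by `w` is what makes the mapping a perspective transform and not a plain affine one: points at different image heights are rescaled by different amounts.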

Original Frame:

Transformed Frame:

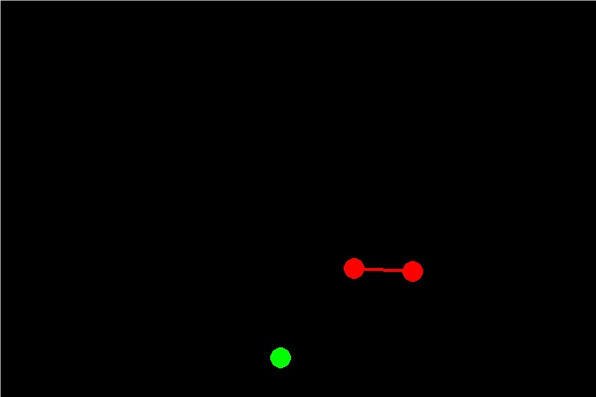

Bird Eye View Generation:

A bird's-eye view is an overhead, drone-like view of a picture or video. After the perspective transformation we have the transformed positions of the detected people, which we now need to plot on a plane. For that we used red and green dots and lines: red for violators and green for people at a safe distance from each other.
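As a rough sketch of how the colours could be assigned, the snippet below turns a list of transformed points and a list of violating pairs into dots and lines to draw. It assumes that only violating pairs are connected by a line; the function name `plan_bird_eye_drawing` and the sample points are hypothetical.

```python
RED = (0, 0, 255)    # BGR colour order, as used by OpenCV drawing functions
GREEN = (0, 255, 0)


def plan_bird_eye_drawing(points, violating_pairs):
    """Return (dots, lines) describing what to draw on the bird's-eye plane."""
    # every person that appears in at least one violating pair is a violator
    violators = {index for pair in violating_pairs for index in pair}
    dots = [(tuple(point), RED if index in violators else GREEN)
            for index, point in enumerate(points)]
    # assumption: a red line joins the two people of each violating pair
    lines = [(tuple(points[i]), tuple(points[j]), RED) for i, j in violating_pairs]
    return dots, lines


points = [[40, 200], [55, 210], [300, 90]]
dots, lines = plan_bird_eye_drawing(points, violating_pairs=[(0, 1)])
```

Each dot could then be drawn with `cv2.circle` and each line with `cv2.line` on a blank image of the same size as the transformed frame.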

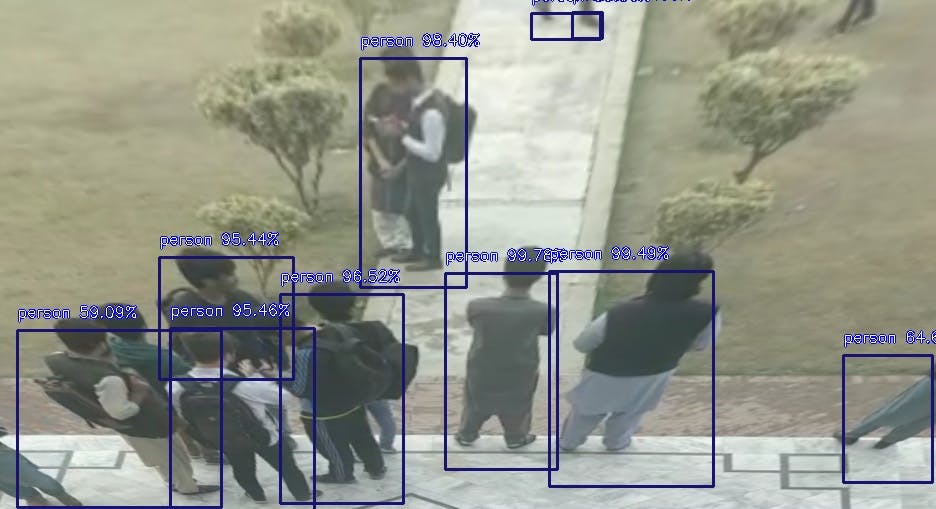

Original Frame:

Bird Eye View:

Distance Estimation:

After performing pedestrian detection, we calculate the distance between people using the perspective transformation. This is our novel approach to calculating distance: the bird's-eye transform places the detected objects on a 2D plane, from which distance can be calculated with any suitable formula. Once the detected objects are plotted on the 2D plane based on their centroids, we use the Pythagorean theorem to calculate the distance between them.

Code for distance estimation

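def cal_distance(p1, p2, distance_w, distance_h):
    # distance_w and distance_h are the pixel lengths in the transformed view
    # that correspond to 180 cm horizontally and vertically
    h = abs(p2[1] - p1[1])
    w = abs(p2[0] - p1[0])
    # convert the pixel offsets to centimetres
    dis_w = float((w / distance_w) * 180)
    dis_h = float((h / distance_h) * 180)
    # Pythagorean theorem on the two scaled offsets
    return int(np.sqrt(((dis_h) ** 2) + ((dis_w) ** 2)))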
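A distance function like this is typically applied to every pair of transformed points. The sketch below shows one way to do that in pure Python. It restates the same scaling as `cal_distance` so that it runs on its own; the names `scaled_distance_cm` and `find_violations`, the strict `<` comparison and the sample values are assumptions for illustration.

```python
import math
from itertools import combinations


def scaled_distance_cm(p1, p2, distance_w, distance_h):
    """Same scaling as cal_distance: distance_w / distance_h pixels equal 180 cm."""
    dis_w = abs(p2[0] - p1[0]) / distance_w * 180
    dis_h = abs(p2[1] - p1[1]) / distance_h * 180
    return int(math.hypot(dis_w, dis_h))


def find_violations(points, distance_w, distance_h, threshold_cm=180):
    """Return index pairs of people standing closer than threshold_cm."""
    pairs = []
    for (i, p1), (j, p2) in combinations(enumerate(points), 2):
        if scaled_distance_cm(p1, p2, distance_w, distance_h) < threshold_cm:
            pairs.append((i, j))
    return pairs


# Hypothetical transformed points and pixel lengths for 180 cm
points = [[40, 200], [55, 210], [300, 90]]
violations = find_violations(points, distance_w=60, distance_h=80)
```

Checking all pairs costs quadratic time in the number of people, which is usually acceptable for the handful of people visible in one camera frame.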

Results

Final Output Frame:

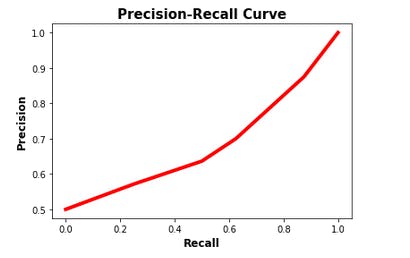

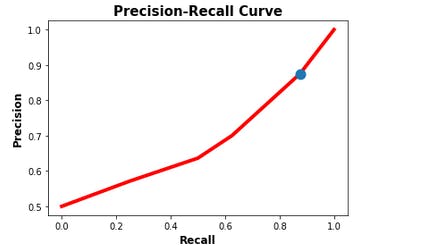

Model Evaluation

We selected YOLOv4 for this project because of its accuracy and, most importantly, its ability to detect objects in real time, which matters to us because our project works on live video. Here we computed the precision-recall curve of our model from the ground truth values and the predictions it makes. As our model needs a threshold to classify objects, we generated a list of thresholds and calculated the precision and recall at each one. Higher precision and recall values indicate better model performance.

The purpose of this curve is to identify the best threshold value to balance precision and recall. Here the blue dot marks a precision of 0.87 and a recall of 0.83 at a threshold of 0.6.
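The threshold sweep behind such a curve can be sketched in a few lines of pure Python. The scores and labels below are toy values, not the evaluation data from this project, and the function name `precision_recall` is hypothetical. Returning a precision of 1.0 when nothing is predicted is a convention chosen here, not something stated in the text.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when scores >= threshold count as detections."""
    predicted = [s >= threshold for s in scores]
    tp = sum(1 for p, y in zip(predicted, labels) if p and y)
    fp = sum(1 for p, y in zip(predicted, labels) if p and not y)
    fn = sum(1 for p, y in zip(predicted, labels) if not p and y)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy data: confidence of each candidate and whether it is a real person (1) or not (0)
scores = [0.95, 0.9, 0.8, 0.65, 0.55, 0.4]
labels = [1, 1, 0, 1, 1, 0]

# one (threshold, precision, recall) entry per threshold
curve = [(t, *precision_recall(scores, labels, t)) for t in (0.3, 0.5, 0.7, 0.9)]
```

Raising the threshold in this toy data trades recall for precision, which is the balance the curve is meant to expose.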


Problems Faced

Distance From 2D Image:

The first problem we faced during this project was calculating distance from a 2D image. A 2D image has no information about depth, so we cannot tell how close or far an object is from the camera. To address this we applied a perspective transformation, which gave us an overhead view that normalized the depth factor.

Problem in Perspective Transformation:

The next problem that arose was the order of the steps. If we detect the people in the frame first and then apply the perspective transformation, the positions of those people will most likely change in the transformed frame, so the dimensions given by YOLO would no longer be accurate. We also could not apply the perspective transformation first and then detect people in the transformed frame, because the image is stretched there, which hurts YOLO's accuracy and makes missed detections possible. To address this problem we performed the detection first, and then applied the same perspective transformation to the points we received from YOLO so that they match the perspective-transformed output frame.

Camera Panning Problem:

When we pan the camera from left to right or vice versa, the whole frame of the camera is disturbed, which also disturbs the ROI (region of interest). To solve that we made the ROI static within the frame, so that it moves with the camera angle.

Conclusion

This is a real-time surveillance system that detects social distancing violations and shows the results in real time. It can be used in public spaces to enforce safety protocols. Furthermore, it can run on a normal CCTV camera feed and does not require any additional hardware, which makes it cost-efficient to implement.

Future Work

Future experiments to improve our approach include re-training the model with video feeds from the deployment region and testing the performance with newer detection algorithms. Tracking the same person across different cameras at the same time could also be addressed. In addition, automatic selection of the ROI (region of interest) could be done using computer vision.